This article is compatible with the latest version of Silverlight.

Introduction

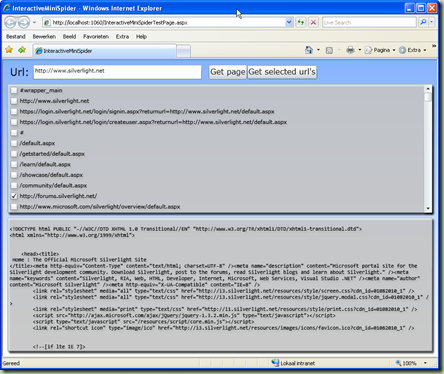

In this article we’ll look at building a spider, which can load web pages and extract links. It will then allow the user to select which links it wants to retrieve, which adds more links to the list. It will look something like this:

Building it involves using a two-way DOM bridge to interact with jQuery and writing a simple parser for the HTML we retrieve. Finally, we’ll take a short look at making Uri objects selectable in a ListBox.

You can download the source code for this article here.

Step 1: Getting pages from the web

The first thing I worked out was how to get pages from the web. As you may well know, Silverlight 3 provides several options for communicating over the web. However, they all share the same security behavior: they all respect the cross-domain policy. Normally this is a good thing, but it gets in the way when building a spider, because you want to be able to get pages from anywhere. Two alternatives are available that allow us to get around the cross-domain policy:

- Build a web service which can serve as a proxy for the requests

- Use JavaScript and the DOM bridge to leverage the browser for the requests

As I wanted the clients to load the pages, limiting the strain on the server’s bandwidth, I decided to go with the second option. To make life easier on myself in terms of browser compatibility, I chose to use jQuery to do the requests.

Keep in mind that the browser applies its own same-origin policy to XMLHttpRequest, which jQuery uses for these requests, so requests to other domains only succeed where the browser permits them.

Step 1.1: Building the JavaScript for jQuery

Although this is not really part of Silverlight, it’s an important part of the code, and understanding how it works matters for the interaction through the DOM bridge. Here is the script I’ve included at the start of the BODY of the aspx page that hosts the Silverlight application:

    <script type="text/javascript" id="spiderScript">
        // Called from Silverlight: starts an asynchronous request for the given URL.
        function getUrlAsync(url) {
            $.ajax({
                success: getUrlSuccess,
                error: getUrlError,
                url: url
            });
        }

        // jQuery success callback: hands the page back to the Silverlight Spider object.
        function getUrlSuccess(data, textStatus) {
            var plugin = document.getElementById("slPlugin");
            plugin.Content.Spider.getUrlCompleted(data, textStatus, null);
        }

        // jQuery error callback: shows the failure details to the user.
        function getUrlError(XMLHttpRequest, textStatus, errorThrown) {
            alert(XMLHttpRequest + '\ntextStatus: ' + textStatus + '\nerror:' + errorThrown);
        }
    </script>

As you can see, there are three functions in there. The first function is the one we want to call from Silverlight. It initiates a request for a specific URL. The other two functions are callbacks for jQuery: getUrlSuccess is called whenever a page is successfully retrieved, and getUrlError is called whenever something goes wrong.

Looking at getUrlSuccess we can see that it calls back into Silverlight with the resulting page. It’s worth noting that I obviously added an id attribute to the object tag containing the Silverlight plugin with the value slPlugin in order to be able to get to it from this function.

Step 1.2: Accessing JavaScript from Silverlight

In order to abstract the spider code from the rest of the Silverlight code, I created a separate Spider class. This means any calls to the JavaScript code and back have to go through this class. The first thing to do is to make sure this class is accessible from JavaScript. In order to do this we must register it with the browser using the HtmlPage class. I placed the following line in the Spider constructor:

HtmlPage.RegisterScriptableObject("Spider", this);

Please be aware that this does mean we can only have a single instance of the Spider class, as otherwise the name of the object would be used multiple times, which would obviously result in an error.

The next step is to initiate a page request. In order to do this, I created a public method called GetUrlAsync, which calls the JavaScript function getUrlAsync (I know, this can get confusing very quickly):

    public void GetUrlAsync(Uri uri)
    {
        if (_status == SpiderStatus.Inactive)
        {
            _status = SpiderStatus.SingleUri;
        }
        HtmlPage.Window.Invoke("getUrlAsync", uri.ToString());
    }

As you can see, I first change the internal status of the Spider. We’ll come back to that later on. Next we call the JavaScript function getUrlAsync. To do this we again use the HtmlPage class, which has a static property Window. This is an HtmlWindow instance that allows DOM access to the current browser window. From there we can call the Invoke method with the function name and any parameters we need to pass. In this case we pass the URL as a string.

Step 1.3: Facilitating a callback

If we now refer back to the JavaScript, we see that it expects the Spider object to have a method called getUrlCompleted, and that it passes this method three arguments. Here is how that looks in the Spider class:

    [ScriptableMember]
    public void getUrlCompleted(string result, object error)
    {
        if (_status == SpiderStatus.SingleUri)
        {
            _status = SpiderStatus.Inactive;
            DoGetUrlCompleted(result, null);
        }
        else if (_status == SpiderStatus.UriList)
        {
            _htmlPages.Add(result);
            HandleNextUri();
        }
    }

The first thing to note is the ScriptableMember attribute that precedes the method. This makes the method available in the DOM model.

The second thing to note is that although the JavaScript passes three arguments to this method, it only declares two parameters. This illustrates that JavaScript calling rules still apply when calling into Silverlight: we can pass fewer or, as in this case, more arguments than the signature declares. Arguments are matched by position, so textStatus ends up in the error parameter here, and any surplus arguments are simply discarded. Parameters for which no argument is passed get the default .NET value: null for reference and nullable types, and the type’s default value (such as 0 or false) for non-nullable value types. It is therefore important to check these parameters before using them in production code.

In the method we can also see that I’ve included more than one mode of operation for the Spider class. The first one, handling a single URL, is handled first. It simply resets the status to Inactive and triggers an event to notify any listening code that it has finished getting the page. The second mode of operation is to get more than one URL. In that case all the code does is add the resulting page to a list and move on to the next URL. The two methods that do that look like this:

    public void GetUrlsFromEnumerableAsync(IEnumerable<Uri> uriList)
    {
        if (_status != SpiderStatus.Inactive)
        {
            return;
        }
        _htmlPages.Clear();
        _status = SpiderStatus.UriList;
        foreach (Uri uri in uriList)
        {
            if (!_uriQueue.Contains(uri))
            {
                _uriQueue.Enqueue(uri);
            }
        }
        HandleNextUri();
    }

    private void HandleNextUri()
    {
        if (_uriQueue.Count > 0)
        {
            GetUrlAsync(_uriQueue.Dequeue());
        }
        else
        {
            _status = SpiderStatus.Inactive;
            DoGetUrlsFromEnumerableCompleted(_htmlPages);
        }
    }
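
The DoGetUrlCompleted and DoGetUrlsFromEnumerableCompleted helpers are not listed in this article. As a rough idea of how such a helper might raise the completion event, here is a minimal sketch in plain .NET. The class names, the event name and the Error property are hypothetical; only the Result property is implied by the e.Result used later on, and the helper is public here purely so the sketch can be tried on its own.

```csharp
using System;

// Hypothetical event arguments; only Result is implied by the article (e.Result).
public class GetUrlCompletedEventArgs : EventArgs
{
    public GetUrlCompletedEventArgs(string result, object error)
    {
        Result = result;
        Error = error;
    }

    public string Result { get; private set; }
    public object Error { get; private set; }
}

public class SpiderEventsSketch
{
    // Hypothetical event that the page code could subscribe to.
    public event EventHandler<GetUrlCompletedEventArgs> GetUrlCompleted;

    // Mirrors the DoGetUrlCompleted(result, null) call made in getUrlCompleted.
    public void DoGetUrlCompleted(string result, object error)
    {
        // Copy the delegate first so that a handler unsubscribing on another
        // thread cannot turn the field into null between the check and the call.
        EventHandler<GetUrlCompletedEventArgs> handler = GetUrlCompleted;
        if (handler != null)
        {
            handler(this, new GetUrlCompletedEventArgs(result, error));
        }
    }
}
```

A subscriber would attach a handler with += and read e.Result in it, which matches how the result is consumed in Step 3.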

Step 2: Getting URLs from an HTML page

Now that we have the HTML, it is time to extract other URLs from it. I chose to do this with some simple string parsing code. There are many ongoing discussions on the web about using regular expressions for parsing HTML. In my experience this can work, but covering all the edge cases that may occur is very complicated. Here is my parsing code:

    private static string[] GetLinks(string html)
    {
        List<string> result = new List<string>();
        string document = html;
        while (document.Length > 0)
        {
            // Find the start of the next anchor tag. Note that this search is
            // case-sensitive and also matches other tags that start with "<a".
            int startIndex = document.IndexOf("<a");
            if (startIndex < 0)
            {
                break;
            }
            // Find the closing tag; stop if the anchor is never closed.
            int endIndex = document.IndexOf("</a>", startIndex);
            if (endIndex < 0)
            {
                break;
            }
            // Keep the whole element, including the closing tag (4 characters).
            result.Add(document.Substring(startIndex, endIndex + 4 - startIndex));
            document = document.Substring(endIndex + 4);
        }
        return result.ToArray();
    }

    private static SelectableUri GetUriFromLink(string link)
    {
        // Locate the href attribute; give up if the anchor has none.
        int hrefIndex = link.IndexOf("href=", StringComparison.OrdinalIgnoreCase);
        if (hrefIndex < 0)
        {
            return null;
        }
        int startIndex = hrefIndex + 5;
        if (startIndex >= link.Length)
        {
            return null;
        }
        int endIndex;
        char quote = link[startIndex];
        if (quote == '"' || quote == '\'')
        {
            // Quoted value: read up to the matching quote.
            startIndex++;
            endIndex = link.IndexOf(quote, startIndex);
        }
        else
        {
            // Unquoted value: read up to the first space or the end of the tag.
            endIndex = link.IndexOfAny(new[] { ' ', '>' }, startIndex);
        }
        if (endIndex > startIndex)
        {
            string uri = link.Substring(startIndex, endIndex - startIndex);
            return new SelectableUri(uri, UriKind.RelativeOrAbsolute);
        }
        return null;
    }

    public ObservableCollection<SelectableUri> GetUrisFromHtml(string html)
    {
        ObservableCollection<SelectableUri> result = new ObservableCollection<SelectableUri>();
        string[] links = GetLinks(html);
        foreach (string link in links)
        {
            SelectableUri uri = GetUriFromLink(link);
            if (uri != null)
            {
                result.Add(uri);
            }
        }
        return result;
    }

As you can see, there is nothing special going on, just some simple string parsing. The last method, GetUrisFromHtml, does exactly that. It takes HTML code in the form of a string and returns an ObservableCollection<SelectableUri> (so you can databind to it). It does this by calling GetLinks, which takes HTML code and returns a string[] with links. These include the anchor tag. Next the Uri is extracted from each of these links by the GetUriFromLink method.
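
One limitation of searching for the plain string "<a" is that it is case-sensitive and also matches tags such as <abbr> or <area>. If that turns out to matter, a slightly stricter search could be used instead. The following is only a sketch of that idea in plain .NET; AnchorSearchSketch and IndexOfAnchorStart are hypothetical names and are not part of the downloadable source.

```csharp
using System;

public static class AnchorSearchSketch
{
    // Returns the index of the next "<a" that is followed by whitespace or '>',
    // ignoring case, or -1 when no such anchor start exists.
    public static int IndexOfAnchorStart(string html, int startAt)
    {
        int index = startAt;
        while (index < html.Length)
        {
            index = html.IndexOf("<a", index, StringComparison.OrdinalIgnoreCase);
            if (index < 0)
            {
                return -1;
            }

            int next = index + 2;
            if (next < html.Length && (char.IsWhiteSpace(html[next]) || html[next] == '>'))
            {
                return index;
            }

            // Not a real anchor (for example <abbr> or <area>): keep searching.
            index = next;
        }
        return -1;
    }
}
```

GetLinks could call such a helper in place of its first IndexOf, at the cost of tracking a position in the original string rather than repeatedly cutting the document down.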

I chose this two-step approach for two reasons. The first is abstraction: not putting everything into a single method keeps things cleaner and easier to read and understand. The second reason is that, with the steps separated, I can also build methods at a later stage that extract metadata about the Uris from the links.
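
Because GetUriFromLink creates its Uris with UriKind.RelativeOrAbsolute, links such as ../about.html come back as relative Uris. Before such a link can be requested, it most likely needs to be resolved against the address of the page it was found on. That step is not shown in this article; below is a minimal sketch in plain .NET. UriResolverSketch and Resolve are hypothetical names, and filtering on http and https is an assumption about which links a spider would want to follow.

```csharp
using System;

public static class UriResolverSketch
{
    // Returns an absolute http(s) Uri for a link found on the page at baseUri,
    // or null when the link cannot be resolved or uses another scheme.
    public static Uri Resolve(Uri baseUri, Uri link)
    {
        Uri absolute;
        if (!Uri.TryCreate(baseUri, link, out absolute))
        {
            return null;
        }

        // Skip schemes such as mailto: that cannot be fetched as a page.
        bool isWeb = absolute.Scheme == Uri.UriSchemeHttp
                  || absolute.Scheme == Uri.UriSchemeHttps;
        return isWeb ? absolute : null;
    }
}
```

For a page at http://example.com/a/b.html, the relative link ../c.html would resolve to http://example.com/c.html, while a mailto: link would be dropped.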

Step 3: Making the URLs available to the user for selection

So now that we have a collection of SelectableUri objects, allowing the user to select them is easy:

    resultTextBox.Text = e.Result;
    _uris = _spider.GetUrisFromHtml(e.Result);
    urlSelectionListBox.ItemsSource = _uris;

And the XAML:

    <StackPanel Orientation="Horizontal" Margin="0,10,0,0">
        <TextBlock Text="Url: " FontSize="21.333" Margin="15,0,0,0" />
        <TextBox x:Name="urlTextBox"
                 Width="350" Margin="0,0,15,0" ToolTipService.ToolTip="Type your first Url here"/>
        <Button x:Name="getUrlButton" Content="Get page" Click="getUrlButton_Click" FontSize="16" />
        <Button x:Name="getSelectedUrlsButton" Content="Get selected url's" Click="getSelectedUrlsButton_Click" FontSize="16" />
    </StackPanel>

    <ListBox x:Name="urlSelectionListBox" Grid.Row="1" Background="#B2FFFFFF" Margin="10" ToolTipService.ToolTip="Select any extra Url's here">
        <ListBox.Effect>
            <DropShadowEffect/>
        </ListBox.Effect>
        <ListBox.ItemTemplate>
            <DataTemplate>
                <StackPanel Orientation="Horizontal">
                    <CheckBox IsChecked="{Binding IsSelected, Mode=TwoWay}" />
                    <TextBlock Text="{Binding OriginalString}" />
                </StackPanel>
            </DataTemplate>
        </ListBox.ItemTemplate>
    </ListBox>

    <TextBox x:Name="resultTextBox" AcceptsReturn="True" TextWrapping="Wrap" ScrollViewer.VerticalScrollBarVisibility="Auto" Grid.Row="2" FontFamily="Consolas" Background="#B2FFFFFF" Margin="10,0,10,10">
        <TextBox.Effect>
            <DropShadowEffect/>
        </TextBox.Effect>
    </TextBox>

If you look at the DataTemplate for the ListBox, you’ll notice that the CheckBox’s IsChecked property is bound to the IsSelected property of our SelectableUri object. This is a simple boolean, which allows us to easily detect which Uris are selected:

    var selectedUris = from uri in _uris
                       where uri.IsSelected
                       select uri;

    _spider.GetUrlsFromEnumerableAsync(selectedUris.Cast<Uri>());
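
The SelectableUri class itself is not listed in this article. Judging from the way it is used (it is constructed with a string and a UriKind, bound through OriginalString and IsSelected, and cast to Uri), it could look roughly like the sketch below. Implementing INotifyPropertyChanged is an assumption; it is only needed if IsSelected is also changed from code and the CheckBox has to follow.

```csharp
using System;
using System.ComponentModel;

// A Uri that remembers whether the user has selected it.
public class SelectableUri : Uri, INotifyPropertyChanged
{
    private bool _isSelected;

    public SelectableUri(string uriString, UriKind uriKind)
        : base(uriString, uriKind)
    {
    }

    public event PropertyChangedEventHandler PropertyChanged;

    public bool IsSelected
    {
        get { return _isSelected; }
        set
        {
            if (_isSelected == value)
            {
                return; // nothing changed, so no notification
            }
            _isSelected = value;

            // Tell any bindings that the value changed.
            PropertyChangedEventHandler handler = PropertyChanged;
            if (handler != null)
            {
                handler(this, new PropertyChangedEventArgs("IsSelected"));
            }
        }
    }
}
```

Deriving from Uri keeps OriginalString available for the TextBlock binding and lets the selected items be passed to GetUrlsFromEnumerableAsync as plain Uri objects.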

Summary

So what have we learned?

- We’ve seen how to build a two-way DOM bridge to do asynchronous operations in JavaScript.

- We’ve interacted with jQuery to retrieve pages from the internet.

- We’ve built a simple HTML parser to extract the links from any HTML.

- And finally we’ve seen a simple databinding trick to easily make objects selectable.