Windows Phone 7 already has some speech features built into the system, for example voice commands that can be invoked by holding down the Start button. As with many other features of this first generation of the platform, access to them was extremely limited for app developers. Once again, Windows Phone 8 not only improves this situation considerably, but also adds a variety of completely new features that both developers and users will benefit from. In this article, I'll take a closer look at text to speech and its counterpart, speech to text (speech recognition).

Required Setup

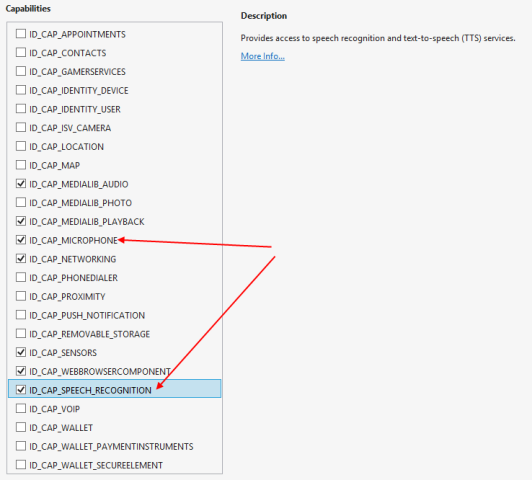

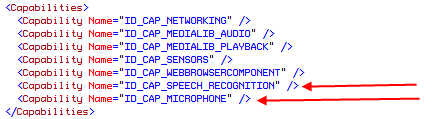

By now you're probably already used to some preparation that needs to be done before you can start using certain Windows Phone features in your apps. Speech is no different. If you want to use either text to speech or speech recognition, you need to specify the corresponding capability ID_CAP_SPEECH_RECOGNITION in your WMAppManifest.xml file, or you'll receive exceptions when you try to access any of the involved APIs. Since speech recognition also uses the microphone to record the user's voice, you need to additionally specify ID_CAP_MICROPHONE if you want to use it. As always, this can be performed using the visual manifest editor:

… or manually in the XML file directly:
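As a rough sketch, the relevant entries inside the Capabilities element of WMAppManifest.xml would look something like this. Any other capabilities your app already declares are left out here, and the microphone entry is only needed for speech recognition:

```xml
<Capabilities>
  <!-- required for both text to speech and speech recognition -->
  <Capability Name="ID_CAP_SPEECH_RECOGNITION" />
  <!-- additionally required for speech recognition -->
  <Capability Name="ID_CAP_MICROPHONE" />
</Capabilities>
```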

With that out of the way, let's start using the new features!

Text to Speech

Text to Speech, or TTS for short, is one of those features that can be used incredibly easily with just a few lines of code, but that can also become quite complex, depending on how far you as a developer are willing to take it. TTS is a built-in feature of Windows Phone 8, meaning you do not need an online connection for it to work; all speech synthesis is performed on the local device.

The fundamental class for TTS is located in the Windows.Phone.Speech.Synthesis namespace [1] and named "SpeechSynthesizer" [2]. You'll find that no matter what speech features you use on Windows Phone 8, the involved APIs always provide their functionality in asynchronous flavors only. This is also true for the synthesis of speech, even though it is performed locally. Luckily, working with these APIs is still extremely simple thanks to the async and await keywords in C#. The simplest sample to make the phone talk to you looks something like this:

    // requires: using System; using Windows.Phone.Speech.Synthesis;
    var synthesizer = new SpeechSynthesizer();
    await synthesizer.SpeakTextAsync("Hello World!");

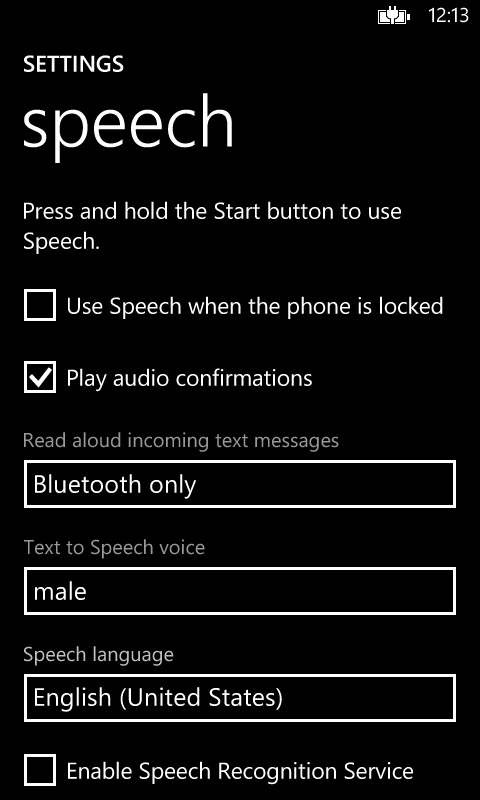

When you try this you will hear a pretty natural sounding voice. What voice in particular is used for speech in this case depends on the selection you have made on the "speech" settings screen of your phone:

Here you can switch between male and female voices as well as choose between the various supported languages. This already hints at a problem that you will inevitably run into: if you select "German" as language here, the English text "Hello World!" will sound as if it were spoken by the bad guys in an Indiana Jones movie. Although funny, it's probably not what you want to achieve in your app. To work around this, you have to use the "InstalledVoices" class [3] in code, which provides access to both the default voice (configured in the settings screen shown above) and all other available voices on the phone. That way you can select whatever voice you intend to use, independently of the user's settings. Like this:

    // requires: using System.Linq;
    var synthesizer = new SpeechSynthesizer();

    // select the first installed US-English voice, if there is one
    var englishVoice = InstalledVoices.All
        .FirstOrDefault(voice => voice.Language.Equals("en-US"));

    if (englishVoice != null)
    {
        // let the synthesizer use the English voice
        synthesizer.SetVoice(englishVoice);
    }

    await synthesizer.SpeakTextAsync("Hello World!");

The available speech synthesizer features don't stop at this point though. Windows Phone supports the so-called "Speech Synthesis Markup Language" (SSML), which allows you to control the characteristics of synthetic speech in great detail. To this end, the synthesizer class has various methods that allow you to speak SSML from both local [4] and remote [5] locations, and some of the other members offered by the class also only make sense for SSML speech. SSML lets you control things like pitch and volume, pauses between words, and dynamic switching between different voices on a very granular level. If you want to learn more about this topic, a quick description of the features can be found on MSDN [6], whereas the full documentation is made available by the W3C [7].
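To give a rough idea of the local variant, the following sketch passes an inline SSML string to the synthesizer's SpeakSsmlAsync method. The class and method names (SsmlSample, SpeakWithSsmlAsync) and the sample text are made up for this illustration, and the markup only touches a tiny part of what SSML offers:

```csharp
using System;
using System.Threading.Tasks;
using Windows.Phone.Speech.Synthesis;

// Hypothetical helper class, named for illustration only.
public static class SsmlSample
{
    public static async Task SpeakWithSsmlAsync()
    {
        var synthesizer = new SpeechSynthesizer();

        // SSML 1.0 document: a half-second pause after "Hello",
        // then "World!" spoken louder and with a higher pitch
        const string ssml =
            "<speak version=\"1.0\" " +
            "xmlns=\"http://www.w3.org/2001/10/synthesis\" xml:lang=\"en-US\">" +
            "Hello <break time=\"500ms\"/> " +
            "<prosody pitch=\"high\" volume=\"loud\">World!</prosody>" +
            "</speak>";

        await synthesizer.SpeakSsmlAsync(ssml);
    }
}
```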

Speech Recognition

The counterpart to text to speech is speech recognition, or speech to text. As you can probably imagine, this is a lot harder to achieve than synthesizing text to speech. The latter "only" has to work out a way to create proper sounds from well-defined source material (text) that has comparatively few stumbling blocks (like acronyms or names). Speech recognition on the other hand potentially has to work with millions of different voices, all with their own characteristics. In addition to inherent details like pitch and tone, people around the world also show dramatic differences in pronunciation and in which syllables they emphasize, even when they formally speak the same language (think dialects).

For this reason, speech recognition on Windows Phone is mostly performed remotely: preprocessed audio material is uploaded to and analyzed by specialized, high-performance systems running somewhere in the cloud. To use speech recognition, the device therefore needs a working data connection.

The namespace involved in speech recognition is Windows.Phone.Speech.Recognition [8], and a quick look at it shows that there are a lot more types available here than for synthesis. However, most of the work still comes down to a few fundamental classes, in particular the "SpeechRecognizer" [9] and "SpeechRecognizerUI" [10]. Both allow you to do speech recognition, but the latter provides the (customizable) default user interface a Windows Phone user is most likely familiar with. Again, getting started can be quite simple, with only a few lines of code:

    // requires: using System; using Windows.Phone.Speech.Recognition;

    // create and customize the UI
    var recognizerUI = new SpeechRecognizerUI();
    recognizerUI.Settings.ListenText = "I'm listening...";
    recognizerUI.Settings.ExampleText = "Speech recognition on Windows Phone!";

    // perform the recognition
    var speechRecognitionUIResult = await recognizerUI.RecognizeWithUIAsync();

    // make sure to check the status before processing any results
    if (speechRecognitionUIResult.ResultStatus == SpeechRecognitionUIStatus.Succeeded)
    {
        ResultText.Text = speechRecognitionUIResult.RecognitionResult.Text;
    }

The visual result of this looks like:

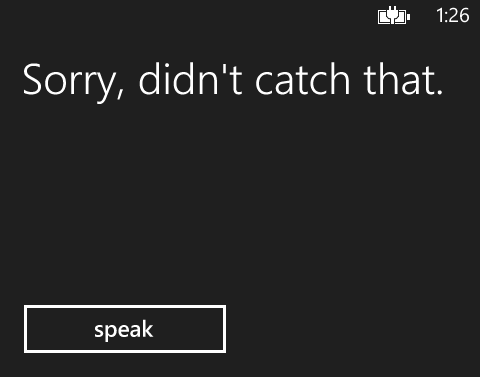

The default UI also has additional dialogs that are shown for example when the recognition failed…

… or was successful:

The behavior of that UI can be customized slightly by changing the settings on the "SpeechRecognizerUI" instance. For example, you can turn off the result screen, or prevent the recognized result from being read back to the user.
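A minimal sketch of these two tweaks could look like the following. It assumes the ShowConfirmation and ReadoutEnabled properties of the UI settings are the switches for the result screen and the read-back respectively; the wrapper class and method names are invented for this example:

```csharp
using System;
using System.Threading.Tasks;
using Windows.Phone.Speech.Recognition;

// Hypothetical helper class, named for illustration only.
public static class RecognizerUISample
{
    public static async Task<string> RecognizeWithoutConfirmationAsync()
    {
        var recognizerUI = new SpeechRecognizerUI();

        // skip the result screen after a successful recognition
        recognizerUI.Settings.ShowConfirmation = false;

        // do not read the recognized text back to the user
        recognizerUI.Settings.ReadoutEnabled = false;

        var result = await recognizerUI.RecognizeWithUIAsync();

        // return null when the recognition did not succeed
        return result.ResultStatus == SpeechRecognitionUIStatus.Succeeded
            ? result.RecognitionResult.Text
            : null;
    }
}
```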

One important detail is to always check the "ResultStatus" property of the returned "SpeechRecognitionUIResult" [11] because speech recognition can fail due to a variety of reasons:

- Another speech recognition is in progress (usually indicates a problem in your code)

- The phone's speech feature is otherwise in use, e.g. when a phone call comes in

- The operation was canceled, either by the user or by navigating away from the app

- The user declined the speech privacy policy

The last point hints at the fact that the user has to actively agree to using the speech recognition services. When you take another look at the above screenshot of the speech settings page, you can see the corresponding check box at the bottom of the page. If the user hasn't agreed to the policy and your app tries to use the feature, they are presented with an agreement screen first, and of course speech recognition will fail if they decline. Fortunately this does not have to be done on a per-app basis, so if the user has accepted the policy at any time before, your app won't run into this problem and your users won't be bothered with it again.
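The failure reasons listed above could be handled roughly as follows. This sketch assumes that the SpeechRecognitionUIStatus values Busy, Preempted, Cancelled and PrivacyPolicyDeclined correspond to the four bullet points in that order; the helper class and the message texts are made up for illustration:

```csharp
using Windows.Phone.Speech.Recognition;

// Hypothetical helper class, named for illustration only.
public static class RecognitionStatusSample
{
    // Translates the status of a UI recognition into a message for the user.
    public static string Describe(SpeechRecognitionUIResult uiResult)
    {
        switch (uiResult.ResultStatus)
        {
            case SpeechRecognitionUIStatus.Succeeded:
                return "You said: " + uiResult.RecognitionResult.Text;
            case SpeechRecognitionUIStatus.Busy:
                return "Another speech recognition is already in progress.";
            case SpeechRecognitionUIStatus.Preempted:
                return "Speech was interrupted, for example by a phone call.";
            case SpeechRecognitionUIStatus.Cancelled:
                return "The recognition was canceled.";
            case SpeechRecognitionUIStatus.PrivacyPolicyDeclined:
                return "Speech recognition requires accepting the privacy policy.";
            default:
                return "Speech recognition failed.";
        }
    }
}
```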

If the built-in UI doesn't fit your needs, you can create a completely custom UI for your app. To this end, the "SpeechRecognizer" class mentioned above provides recognition features that do not involve any predefined UI at all:

    var recognizer = new SpeechRecognizer();
    var speechRecognitionResult = await recognizer.RecognizeAsync();
    ResultText.Text = speechRecognitionResult.Text;

When something goes wrong with the recognition in this case, exceptions are thrown. In addition, events exist on the recognizer that inform you e.g. about audio problems.
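A defensive version of the snippet above might look like this sketch. It assumes the recognizer's AudioProblemOccurred event and the Problem property of its event arguments are the audio notifications in question, and it simply catches the general Exception type because the concrete error is usually only distinguishable by its HResult. The class and method names are hypothetical:

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;
using Windows.Phone.Speech.Recognition;

// Hypothetical helper class, named for illustration only.
public static class SafeRecognitionSample
{
    public static async Task<string> RecognizeSafelyAsync()
    {
        var recognizer = new SpeechRecognizer();

        // assumption: this event reports issues such as too much noise or too quiet input
        recognizer.AudioProblemOccurred += (sender, args) =>
            Debug.WriteLine("Audio problem: " + args.Problem);

        try
        {
            var result = await recognizer.RecognizeAsync();
            return result.Text;
        }
        catch (Exception ex)
        {
            // without the built-in UI, failures surface as exceptions
            Debug.WriteLine("Recognition failed: 0x{0:X8} {1}", ex.HResult, ex.Message);
            return null;
        }
    }
}
```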

No matter whether you use the built-in UI or a custom implementation, it's a good idea to take a close look at the "SpeechRecognitionResult" [12], which is returned directly by the speech recognizer and is also available as a property on the UI results returned by the speech recognizer UI. In particular, it indicates the confidence level with which the result could be determined. This includes a value of "Rejected" when the recognition service failed to guess any results. The confidence level is determined by an underlying floating point confidence score that you can also access here if you want more granularity. In some cases alternate interpretations of the analyzed audio may exist. The result then contains the most likely one, based on the confidence scores, but you can access the other alternates too by using the "GetAlternates" method on the result.
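As a sketch of how this could be used, the following helper rejects unusable results and logs the alternates when the confidence is low. It assumes the confidence level is exposed as TextConfidence and the raw score as Details.ConfidenceScore; the helper name and the limit of three alternates are arbitrary choices for this example:

```csharp
using System.Diagnostics;
using Windows.Phone.Speech.Recognition;

// Hypothetical helper class, named for illustration only.
public static class ConfidenceSample
{
    // Returns the recognized text, or null if the recognizer rejected the input.
    public static string PickText(SpeechRecognitionResult result)
    {
        if (result.TextConfidence == SpeechRecognitionConfidence.Rejected)
        {
            return null;
        }

        if (result.TextConfidence == SpeechRecognitionConfidence.Low)
        {
            // inspect up to three alternates together with their raw scores
            foreach (var alternate in result.GetAlternates(3))
            {
                Debug.WriteLine("{0} ({1:F2})",
                    alternate.Text, alternate.Details.ConfidenceScore);
            }
        }

        return result.Text;
    }
}
```

Whether a low-confidence result should be accepted, confirmed with the user, or discarded is a design decision that depends on how costly a wrong recognition is in your app.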

Working with Grammars

Grammars are a way to tell the recognizer what kind of results you expect. By providing information about the expected structure and/or limiting the possible results to a pool of fixed values, you can improve the quality and accuracy of recognition significantly. Grammars are always used behind the scenes. Even if you do not specify any grammar explicitly (like in all the examples above), Windows Phone uses a built-in default "dictation" grammar. The only other built-in grammar is "WebSearch" [13].

Grammars are maintained with the recognizer, and can be accessed through its "Grammars" collection property. This special collection type ("SpeechGrammarSet" [14]) has multiple custom methods to add grammars from local lists, from the above mentioned pre-defined grammars, and from URI locations. You can add multiple grammars to the recognizer at the same time and enable or disable them whenever you need to. The following example shows how to use local lists:
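Switching from the default dictation grammar to the web search grammar could look roughly like this. The sketch uses the recognizer's Grammars collection with the AddGrammarFromPredefinedType method; the key "webSearch" and the wrapper names are arbitrary choices for this example:

```csharp
using System;
using System.Threading.Tasks;
using Windows.Phone.Speech.Recognition;

// Hypothetical helper class, named for illustration only.
public static class WebSearchGrammarSample
{
    public static async Task<string> RecognizeSearchTermAsync()
    {
        var recognizer = new SpeechRecognizer();

        // replace the implicit dictation grammar with the web search grammar
        recognizer.Grammars.AddGrammarFromPredefinedType(
            "webSearch", SpeechPredefinedGrammar.WebSearch);

        var result = await recognizer.RecognizeAsync();
        return result.Text;
    }
}
```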

    var recognizer = new SpeechRecognizer();

    // create a grammar from a local list
    var fruitPhrases = new[] { "apple", "banana", "gorilla" };
    recognizer.Grammars.AddGrammarFromList("Fruits", fruitPhrases);

    // preload the grammar
    // this is done automatically by calling RecognizeAsync, but this
    // gives you more explicit control over it, as it may cause a short delay
    await recognizer.PreloadGrammarsAsync();

    // recognize using the grammar
    var speechRecognitionResult = await recognizer.RecognizeAsync();

When you try this code, you will see that it very accurately recognizes the words listed in the grammar. However, you would have a very hard time if you tried to make it recognize "mango", for example. So this is a simple way of making sure that you receive only results meaningful to your app, or nothing if recognition fails. More on working with grammars that way can be found on MSDN [15][16].
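Working with several list grammars at once and toggling them could be sketched as follows. This assumes that AddGrammarFromList returns the created grammar object and that its Enabled property controls whether the grammar takes part in recognition; the second list and all names are made up for illustration:

```csharp
using System;
using System.Threading.Tasks;
using Windows.Phone.Speech.Recognition;

// Hypothetical helper class, named for illustration only.
public static class MultipleGrammarsSample
{
    public static async Task<string> RecognizeFruitOnlyAsync()
    {
        var recognizer = new SpeechRecognizer();

        // two independent list grammars on the same recognizer
        var fruits = recognizer.Grammars.AddGrammarFromList(
            "Fruits", new[] { "apple", "banana", "mango" });
        var colors = recognizer.Grammars.AddGrammarFromList(
            "Colors", new[] { "red", "green", "blue" });

        // only fruit names should be recognized in this step
        fruits.Enabled = true;
        colors.Enabled = false;

        var result = await recognizer.RecognizeAsync();
        return result.Text;
    }
}
```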

Of course limiting your app to a fixed set of values only works for simple cases. Grammars are a lot more powerful than that. Windows Phone supports SRGS – "Speech Recognition Grammar Specification" – which is another W3C standard [17]. This allows you to specify rules about word ordering, combination of lists, filtering out unwanted "noise" and a lot more. MSDN has an introduction to this complex topic for Windows Phone 8, including additional links to more references and sample How-tos [18].
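To give an impression of how an SRGS grammar might be wired up, the following sketch loads a grammar file that ships with the app. The file name and location (Grammars/Drinks.grxml), the grammar key and the grammar content shown in the comment are all invented for this example; only the AddGrammarFromUri call itself is part of the grammar set API:

```csharp
using System;
using System.Threading.Tasks;
using Windows.Phone.Speech.Recognition;

// Hypothetical helper class, named for illustration only.
public static class SrgsGrammarSample
{
    /* Assumed content of Grammars/Drinks.grxml, included in the app package:

       <grammar version="1.0" xml:lang="en-US" root="order"
                xmlns="http://www.w3.org/2001/06/grammar">
         <rule id="order">
           <item repeat="0-1">I would like</item>
           <one-of>
             <item>coffee</item>
             <item>tea</item>
           </one-of>
           <item repeat="0-1">please</item>
         </rule>
       </grammar>
    */
    public static async Task<string> RecognizeOrderAsync()
    {
        var recognizer = new SpeechRecognizer();

        // load the SRGS grammar from the app's install folder
        var grammarUri = new Uri("ms-appx:///Grammars/Drinks.grxml", UriKind.Absolute);
        recognizer.Grammars.AddGrammarFromUri("Drinks", grammarUri);

        var result = await recognizer.RecognizeAsync();
        return result.Text;
    }
}
```

With a grammar like this, both "tea" and "I would like coffee please" would be accepted, because the optional items describe the filler words around the actual choice.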

Testing Speech

A pleasant surprise with all the speech features is that you can easily test them in the emulator too. For text to speech this seems obvious. Because the emulator images contain a fully working Windows Phone system, there's really no reason why this shouldn't work there too. For speech recognition however more user interaction is required, in particular providing audio input using a microphone. To this end, a microphone connected to the development computer can be used to provide the necessary input for speech recognition without any further setup.

For more complex scenarios, and especially for test cases that work reproducibly, this still may not be sufficient for you. At the moment, I am not aware of any built-in possibilities to provide e.g. pre-recorded audio files as input for testing, so you may need to build a custom solution for this (if you know a simpler way, let me know).
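One possible shape of such a custom solution, offered here only as a sketch, is to hide the recognizer behind a small interface so that tests can feed in scripted answers instead of real audio. Note that this tests your app's reaction to recognized text, not the recognition itself. All type and member names below are hypothetical:

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;
using Windows.Phone.Speech.Recognition;

// Hypothetical abstraction over "something that yields recognized text".
public interface ISpeechInput
{
    Task<string> RecognizeTextAsync();
}

// Production implementation backed by the real recognizer.
public sealed class DeviceSpeechInput : ISpeechInput
{
    private readonly SpeechRecognizer recognizer = new SpeechRecognizer();

    public async Task<string> RecognizeTextAsync()
    {
        var result = await recognizer.RecognizeAsync();
        return result.Text;
    }
}

// Test implementation that replays a fixed list of "recognized" phrases.
public sealed class ScriptedSpeechInput : ISpeechInput
{
    private readonly Queue<string> answers;

    public ScriptedSpeechInput(IEnumerable<string> answers)
    {
        this.answers = new Queue<string>(answers);
    }

    public Task<string> RecognizeTextAsync()
    {
        // throws InvalidOperationException once the script is exhausted
        return Task.FromResult(answers.Dequeue());
    }
}
```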

Conclusion

My personal perception was that on Windows Phone 7 speech features were a nice embellishment, but not many people actively used them on a daily basis. With Windows Phone 8, not only have the features improved and been extended dramatically, developers now also have great access to them through sophisticated APIs, so I expect to see a lot more use of speech in the future. Let's see how upcoming apps will surprise us.